# K8S on Oracle Cloud - I

Let's create a Kubernetes cluster using almost exclusively the free resources that Oracle provides.

The aim here is to explore the infrastructure, manage its state with Terraform, and prepare an environment of our own that we can push Docker images to whenever we need to run a POC or a demo.

## Create a Resource Manager Stack for an OKE cluster

Let's walk through the UI to quickly generate a Terraform config for an OKE cluster.

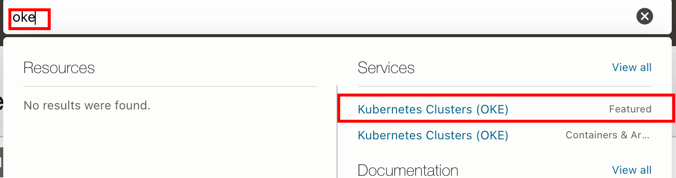

Search for "OKE" in the Oracle Cloud console

Click on "Create Cluster"

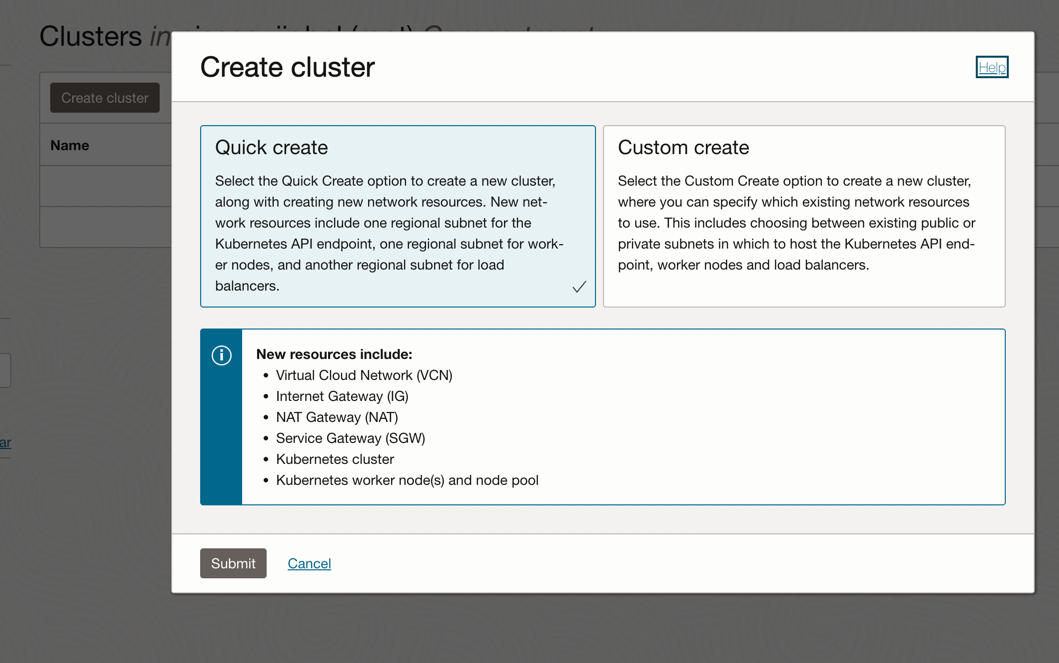

Choose "Quick Create" and hit "Submit"

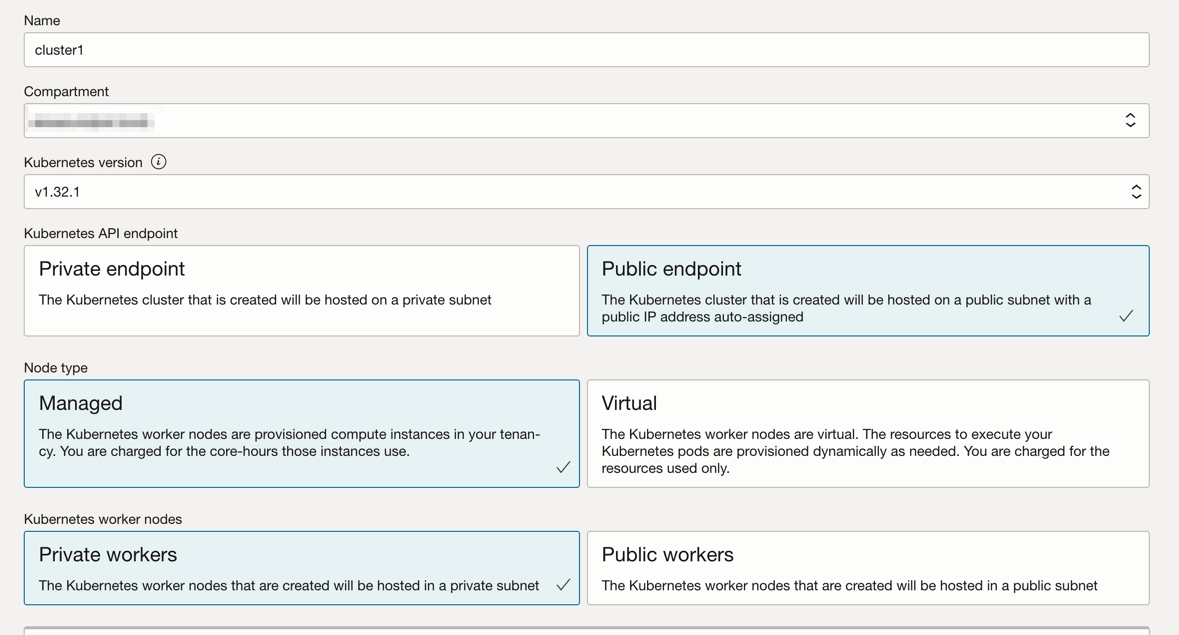

Choose:

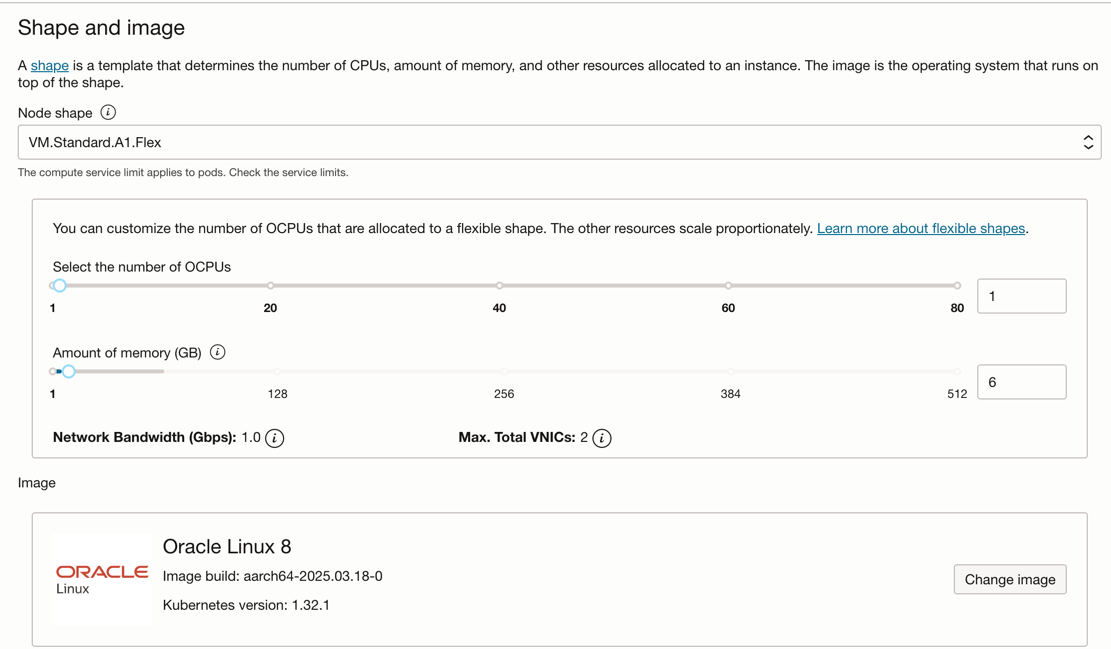

Shape and Image:

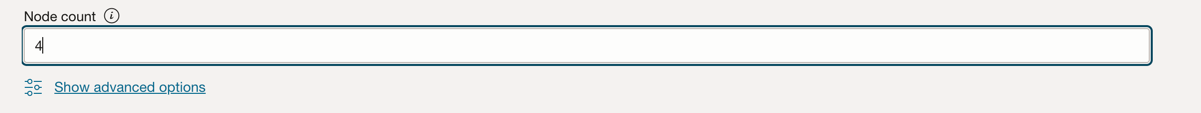

I'm using 4 worker nodes with 1 OCPU and 6 GB of memory each, and a boot volume of ~50 GB each.

As you can see, we will be running on the free-tier Ampere A1 Flex shape (`VM.Standard.A1.Flex`). As long as you stay within 4 OCPUs, 24 GB of memory, and 200 GB of storage in total in your home region, you will not be charged for the compute resources running the nodes. You can split that allowance however you like: a single worker node with everything, 2 x (2 OCPUs and 12 GB), or 4 x (1 OCPU and 6 GB), for instance.
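The arithmetic behind these limits can be written down in Terraform itself. The following is a minimal sketch, not part of the generated config: the variable names and the check name are hypothetical, the limits are the ones quoted above, and `check` blocks assume Terraform 1.5 or later.

```hcl
# Hypothetical sizing variables; defaults match the 4 x (1 OCPU, 6 GB, ~50 GB) layout above.
variable "node_count" {
  type    = number
  default = 4
}

variable "ocpus_per_node" {
  type    = number
  default = 1
}

variable "memory_gbs_per_node" {
  type    = number
  default = 6
}

variable "boot_volume_gbs_per_node" {
  type    = number
  default = 50
}

locals {
  total_ocpus      = var.node_count * var.ocpus_per_node
  total_memory_gbs = var.node_count * var.memory_gbs_per_node
  total_boot_gbs   = var.node_count * var.boot_volume_gbs_per_node
}

# Limits taken from the text above: 4 OCPUs, 24 GB of memory, 200 GB of storage.
check "always_free_limits" {
  assert {
    condition     = local.total_ocpus <= 4
    error_message = "Total OCPUs (${local.total_ocpus}) exceed the 4 OCPU free allowance."
  }

  assert {
    condition     = local.total_memory_gbs <= 24
    error_message = "Total memory (${local.total_memory_gbs} GB) exceeds the 24 GB free allowance."
  }

  assert {
    condition     = local.total_boot_gbs <= 200
    error_message = "Total boot volume size (${local.total_boot_gbs} GB) exceeds the 200 GB free allowance."
  }
}
```

A failed `check` assertion is reported as a warning during `terraform plan` and `terraform apply` and does not block the run, so this works as a reminder rather than a hard guard.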

Hit "Next" and review cluster settings:

Make sure to tick the Basic Cluster option. Enhanced clusters are billed per cluster, whereas Basic clusters have no cluster management fee:

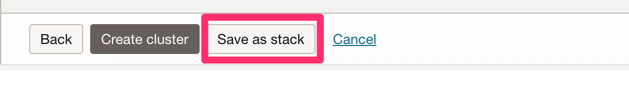

Instead of creating the cluster, we will create a stack in the Resource Manager:

Tracking cloud resources by hand is not easy, and tearing them down may require deleting them in a specific order.

We will leverage the Stacks feature of the Resource Manager to create a Terraform config that we can use to manage our resources:

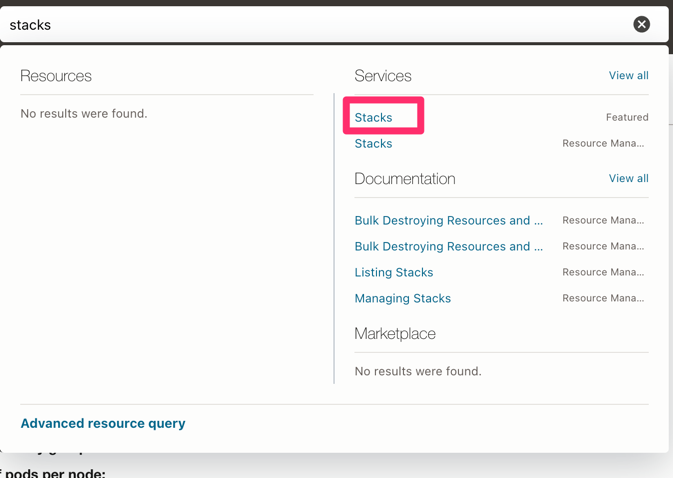

Search for "stacks" in the Oracle Cloud console

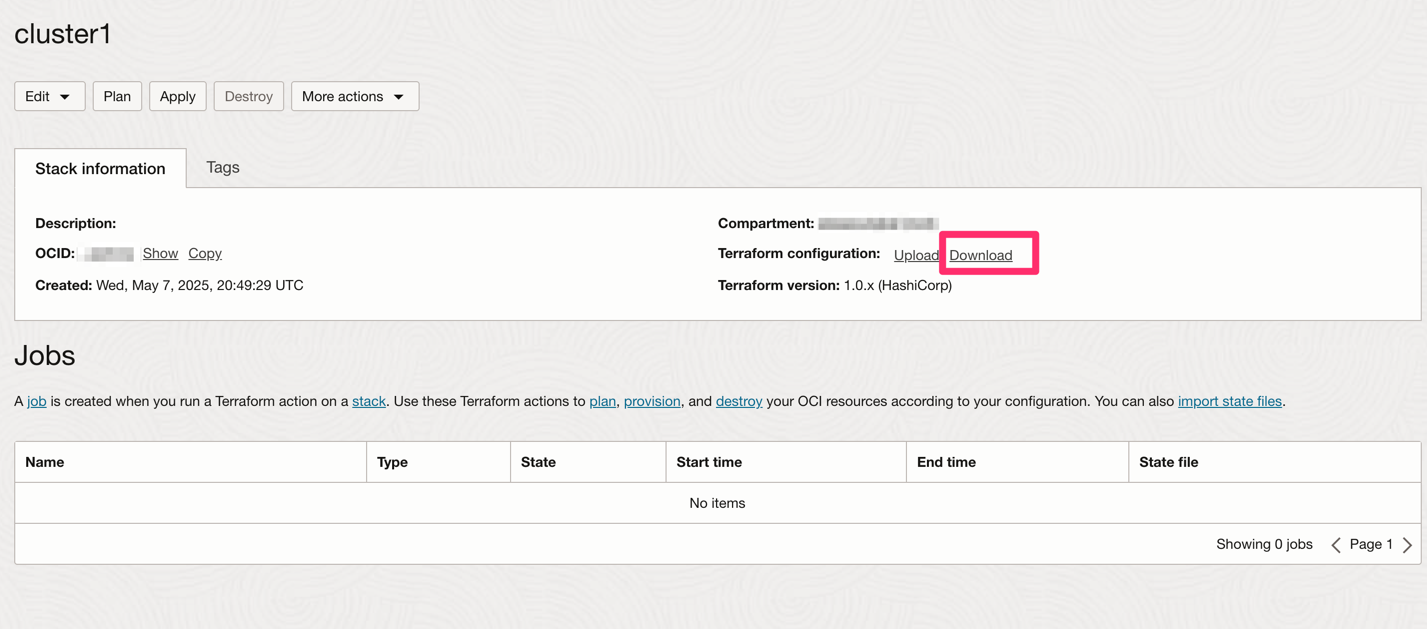

Choose the stack we created through the OKE wizard and download the Terraform config:

The generated main.tf file was almost complete:

```hcl
terraform {
  required_providers {
    oci = {
      source = "oracle/oci"
    }
  }
}

provider "oci" {}

resource "oci_core_vcn" "generated_oci_core_vcn" {
  cidr_block     = "10.0.0.0/16"
  compartment_id = "<your_tenancy_ocid>"
  display_name   = "oke-vcn-quick-cluster1-515f8bd70"
  dns_label      = "cluster1"
}

resource "oci_core_internet_gateway" "generated_oci_core_internet_gateway" {
  compartment_id = "<your_tenancy_ocid>"
  display_name   = "oke-igw-quick-cluster1-515f8bd70"
  enabled        = "true"
  vcn_id         = "${oci_core_vcn.generated_oci_core_vcn.id}"
}

resource "oci_core_nat_gateway" "generated_oci_core_nat_gateway" {
  compartment_id = "<your_tenancy_ocid>"
  display_name   = "oke-ngw-quick-cluster1-515f8bd70"
  vcn_id         = "${oci_core_vcn.generated_oci_core_vcn.id}"
}

resource "oci_core_service_gateway" "generated_oci_core_service_gateway" {
  compartment_id = "<your_tenancy_ocid>"
  display_name   = "oke-sgw-quick-cluster1-515f8bd70"
  services {
    service_id = "<your_service_gateway_ocid>"
  }
  vcn_id = "${oci_core_vcn.generated_oci_core_vcn.id}"
}

resource "oci_core_route_table" "generated_oci_core_route_table" {
  compartment_id = "<your_tenancy_ocid>"
  display_name   = "oke-private-routetable-cluster1-515f8bd70"
  route_rules {
    description       = "traffic to the internet"
    destination       = "0.0.0.0/0"
    destination_type  = "CIDR_BLOCK"
    network_entity_id = "${oci_core_nat_gateway.generated_oci_core_nat_gateway.id}"
  }
  route_rules {
    description       = "traffic to OCI services"
    destination       = "all-<region>-services-in-oracle-services-network"
    destination_type  = "SERVICE_CIDR_BLOCK"
    network_entity_id = "${oci_core_service_gateway.generated_oci_core_service_gateway.id}"
  }
  vcn_id = "${oci_core_vcn.generated_oci_core_vcn.id}"
}

resource "oci_core_subnet" "service_lb_subnet" {
  cidr_block                 = "10.0.20.0/24"
  compartment_id             = "<your_tenancy_ocid>"
  display_name               = "oke-svclbsubnet-quick-cluster1-515f8bd70-regional"
  dns_label                  = "lbsub8f5455efc"
  prohibit_public_ip_on_vnic = "false"
  route_table_id             = "${oci_core_default_route_table.generated_oci_core_default_route_table.id}"
  security_list_ids          = ["${oci_core_vcn.generated_oci_core_vcn.default_security_list_id}"]
  vcn_id                     = "${oci_core_vcn.generated_oci_core_vcn.id}"
}

resource "oci_core_subnet" "node_subnet" {
  cidr_block                 = "10.0.10.0/24"
  compartment_id             = "<your_tenancy_ocid>"
  display_name               = "oke-nodesubnet-quick-cluster1-515f8bd70-regional"
  dns_label                  = "sub534ec198a"
  prohibit_public_ip_on_vnic = "true"
  route_table_id             = "${oci_core_route_table.generated_oci_core_route_table.id}"
  security_list_ids          = ["${oci_core_security_list.node_sec_list.id}"]
  vcn_id                     = "${oci_core_vcn.generated_oci_core_vcn.id}"
}

resource "oci_core_subnet" "kubernetes_api_endpoint_subnet" {
  cidr_block                 = "10.0.0.0/28"
  compartment_id             = "<your_tenancy_ocid>"
  display_name               = "oke-k8sApiEndpoint-subnet-quick-cluster1-515f8bd70-regional"
  dns_label                  = "subbab4a1a47"
  prohibit_public_ip_on_vnic = "false"
  route_table_id             = "${oci_core_default_route_table.generated_oci_core_default_route_table.id}"
  security_list_ids          = ["${oci_core_security_list.kubernetes_api_endpoint_sec_list.id}"]
  vcn_id                     = "${oci_core_vcn.generated_oci_core_vcn.id}"
}

resource "oci_core_default_route_table" "generated_oci_core_default_route_table" {
  display_name = "oke-public-routetable-cluster1-515f8bd70"
  route_rules {
    description       = "traffic to/from internet"
    destination       = "0.0.0.0/0"
    destination_type  = "CIDR_BLOCK"
    network_entity_id = "${oci_core_internet_gateway.generated_oci_core_internet_gateway.id}"
  }
  manage_default_resource_id = "${oci_core_vcn.generated_oci_core_vcn.default_route_table_id}"
}

resource "oci_core_security_list" "service_lb_sec_list" {
  compartment_id = "<your_tenancy_ocid>"
  display_name   = "oke-svclbseclist-quick-cluster1-515f8bd70"
  vcn_id         = "${oci_core_vcn.generated_oci_core_vcn.id}"
}

resource "oci_core_security_list" "node_sec_list" {
  compartment_id = "<your_tenancy_ocid>"
  display_name   = "oke-nodeseclist-quick-cluster1-515f8bd70"
  egress_security_rules {
    description      = "Allow pods on one worker node to communicate with pods on other worker nodes"
    destination      = "10.0.10.0/24"
    destination_type = "CIDR_BLOCK"
    protocol         = "all"
    stateless        = "false"
  }
  # … (other rules unchanged)
  vcn_id = "${oci_core_vcn.generated_oci_core_vcn.id}"
}

resource "oci_core_security_list" "kubernetes_api_endpoint_sec_list" {
  compartment_id = "<your_tenancy_ocid>"
  display_name   = "oke-k8sApiEndpoint-quick-cluster1-515f8bd70"
  # … (rules unchanged)
  vcn_id = "${oci_core_vcn.generated_oci_core_vcn.id}"
}

resource "oci_containerengine_cluster" "generated_oci_containerengine_cluster" {
  cluster_pod_network_options {
    cni_type = "OCI_VCN_IP_NATIVE"
  }
  compartment_id = "<your_tenancy_ocid>"
  endpoint_config {
    is_public_ip_enabled = "true"
    subnet_id            = "${oci_core_subnet.kubernetes_api_endpoint_subnet.id}"
  }
  freeform_tags = {
    "OKEclusterName" = "cluster1"
  }
  kubernetes_version = "v1.32.1"
  name               = "cluster1"
  options {
    admission_controller_options {
      is_pod_security_policy_enabled = "false"
    }
    persistent_volume_config {
      freeform_tags = {
        "OKEclusterName" = "cluster1"
      }
    }
    service_lb_config {
      freeform_tags = {
        "OKEclusterName" = "cluster1"
      }
    }
    service_lb_subnet_ids = ["${oci_core_subnet.service_lb_subnet.id}"]
  }
  type   = "BASIC_CLUSTER"
  vcn_id = "${oci_core_vcn.generated_oci_core_vcn.id}"
}

resource "oci_containerengine_node_pool" "create_node_pool_details0" {
  cluster_id     = "${oci_containerengine_cluster.generated_oci_containerengine_cluster.id}"
  compartment_id = "<your_tenancy_ocid>"
  freeform_tags = {
    "OKEnodePoolName" = "pool1"
  }
  initial_node_labels {
    key   = "name"
    value = "cluster1"
  }
  kubernetes_version = "v1.32.1"
  name               = "pool1"
  node_config_details {
    freeform_tags = {
      "OKEnodePoolName" = "pool1"
    }
    node_pool_pod_network_option_details {
      cni_type = "OCI_VCN_IP_NATIVE"
    }
    placement_configs {
      availability_domain = "<your_ad_prefix>:<region>-<AD-1>"
      subnet_id           = "${oci_core_subnet.node_subnet.id}"
    }
    placement_configs {
      availability_domain = "<your_ad_prefix>:<region>-<AD-2>"
      subnet_id           = "${oci_core_subnet.node_subnet.id}"
    }
    placement_configs {
      availability_domain = "<your_ad_prefix>:<region>-<AD-3>"
      subnet_id           = "${oci_core_subnet.node_subnet.id}"
    }
    size = "4"
  }
  node_eviction_node_pool_settings {
    eviction_grace_duration = "PT60M"
  }
  node_shape = "VM.Standard.A1.Flex"
  node_shape_config {
    memory_in_gbs = "6"
    ocpus         = "1"
  }
  node_source_details {
    image_id    = "<your_node_image_ocid>"
    source_type = "IMAGE"
  }
}
```
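The generated file works as downloaded, but it repeats the same compartment OCID literal in every resource and wraps every reference in `"${...}"`, a style that current Terraform versions flag as deprecated. As an optional tidy-up, here is a sketch of one resource rewritten; the variable name `compartment_ocid` is my own choice, not something the wizard generates, and this block would replace the original internet gateway block rather than sit next to it.

```hcl
# Hypothetical variable replacing the repeated "<your_tenancy_ocid>" literal.
variable "compartment_ocid" {
  type        = string
  description = "OCID of the compartment (or tenancy root) that owns the cluster resources."
}

# Same internet gateway as in the generated file, with a direct reference
# instead of "${...}" and a real boolean instead of the string "true".
resource "oci_core_internet_gateway" "generated_oci_core_internet_gateway" {
  compartment_id = var.compartment_ocid
  display_name   = "oke-igw-quick-cluster1-515f8bd70"
  enabled        = true
  vcn_id         = oci_core_vcn.generated_oci_core_vcn.id
}
```

The same substitution applies to the other resources; behavior should be unchanged, only the notation is cleaner.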

The generated node pool omits the pod subnet. With the `OCI_VCN_IP_NATIVE` CNI you need to provide one in `node_pool_pod_network_option_details`; otherwise `terraform apply` fails with the following error:

```text
oci_containerengine_node_pool.create_node_pool_details0: Creating...
╷
│ Error: can not omit nil fields for field: NodeConfigDetails, due to: can not omit nil fields for field: NodePoolPodNetworkOptionDetails, due to: can not omit nil fields for field: PodSubnetIds, due to: can not omit nil fields, data was expected to be a not-nil list
│
│ with oci_containerengine_node_pool.create_node_pool_details0,
│ on main.tf line 294, in resource "oci_containerengine_node_pool" "create_node_pool_details0":
│ 294: resource "oci_containerengine_node_pool" "create_node_pool_details0" {
```

To remedy this, we will reuse the node subnet for pods. If you want better separation, you can instead create a dedicated subnet and security list for the pods.

Inside `node_config_details` of the `oci_containerengine_node_pool` resource:

```hcl
node_pool_pod_network_option_details {
  cni_type       = "OCI_VCN_IP_NATIVE"
  pod_subnet_ids = [oci_core_subnet.node_subnet.id] # 👈 put actual subnet(s) here
}
```
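For the separate-subnet option, a rough sketch could look like the following. The resource name `pod_subnet`, the CIDR `10.0.32.0/19`, the display name, and the DNS label are all assumptions of mine; the CIDR was picked only because it sits inside the `10.0.0.0/16` VCN without overlapping the three generated subnets. The security list is reused here for brevity, so its rules (which reference `10.0.10.0/24`) would still need to be extended to cover the pod range.

```hcl
# Hypothetical dedicated pod subnet; private, routed through the NAT gateway route table.
resource "oci_core_subnet" "pod_subnet" {
  cidr_block                 = "10.0.32.0/19" # assumed range, adjust to your addressing plan
  compartment_id             = "<your_tenancy_ocid>"
  display_name               = "oke-podsubnet-cluster1" # assumed name
  dns_label                  = "pods"                   # assumed label
  prohibit_public_ip_on_vnic = true
  route_table_id             = oci_core_route_table.generated_oci_core_route_table.id
  security_list_ids          = [oci_core_security_list.node_sec_list.id] # reused; a dedicated list is cleaner
  vcn_id                     = oci_core_vcn.generated_oci_core_vcn.id
}

# In the node pool, point the pod network option at it instead of the node subnet:
#   pod_subnet_ids = [oci_core_subnet.pod_subnet.id]
```

A larger range than a `/24` is used because with VCN-native pod networking every pod takes an address from this subnet.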

You can also be specific about pulling the right provider:

```hcl
terraform {
  required_providers {
    oci = {
      source = "oracle/oci"
    }
  }
}
```
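If you also want to control which provider release gets pulled, a version constraint can be added to the same block. This is a sketch only: the constraints below are illustrative placeholders, not versions I verified against this config, so replace them with whatever you actually tested. It would replace the existing `terraform` block, since a provider can only be declared once per module.

```hcl
terraform {
  # Illustrative lower bound for the Terraform CLI itself.
  required_version = ">= 1.5.0"

  required_providers {
    oci = {
      source  = "oracle/oci"
      version = ">= 5.0.0" # assumed constraint, pin to the release you tested
    }
  }
}
```

After `terraform init`, the exact version selected is recorded in `.terraform.lock.hcl`, which is worth committing alongside `main.tf`.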

Terraform can pick up the OCI environment variables from your shell even without the `TF_VAR_` prefix:

```shell
export OCI_CLI_PROFILE=default
export OCI_CLI_USER="<your_user_ocid>"
export OCI_CLI_TENANCY="<your_tenancy_ocid>"
export OCI_CLI_REGION="eu-zurich-1"
export OCI_CLI_FINGERPRINT="<your_api_key_fingerprint>"
# Use $HOME here: the shell does not expand ~ inside double quotes.
export OCI_CLI_KEY_FILE="$HOME/keys/oci_api_key.pem"
```
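If you would rather not depend on what the provider reads from the environment, the credentials can also be passed explicitly. The sketch below assumes five input variables whose names I chose to match the provider arguments; it would replace the empty `provider "oci" {}` block.

```hcl
# Hypothetical input variables; set them in terraform.tfvars or via
# TF_VAR_tenancy_ocid, TF_VAR_user_ocid, and so on.
variable "tenancy_ocid" {
  type = string
}

variable "user_ocid" {
  type = string
}

variable "fingerprint" {
  type = string
}

variable "private_key_path" {
  type = string
}

variable "region" {
  type    = string
  default = "eu-zurich-1"
}

# API key authentication with every value passed explicitly.
provider "oci" {
  tenancy_ocid     = var.tenancy_ocid
  user_ocid        = var.user_ocid
  fingerprint      = var.fingerprint
  private_key_path = var.private_key_path
  region           = var.region
}
```

This is more verbose than the environment-based setup, but it makes it obvious which identity and region a given run uses.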

Then run:

```shell
terraform init
terraform plan
terraform apply
```
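Once the apply finishes, a few outputs make the result easier to use. The following is a sketch under assumptions: the output names and the data source label `this` are mine, and the `oci_containerengine_cluster_kube_config` data source with its `content` attribute should be double-checked against the provider documentation for your version.

```hcl
# Identifiers of the resources created above.
output "cluster_id" {
  value = oci_containerengine_cluster.generated_oci_containerengine_cluster.id
}

output "node_pool_id" {
  value = oci_containerengine_node_pool.create_node_pool_details0.id
}

# Kubeconfig for the new cluster, fetched through the provider.
data "oci_containerengine_cluster_kube_config" "this" {
  cluster_id = oci_containerengine_cluster.generated_oci_containerengine_cluster.id
}

output "kubeconfig" {
  value     = data.oci_containerengine_cluster_kube_config.this.content
  sensitive = true # hide the kubeconfig from plan and apply output
}
```

With this in place, `terraform output -raw kubeconfig` prints the file so it can be redirected to a location of your choice.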

13 July 2025